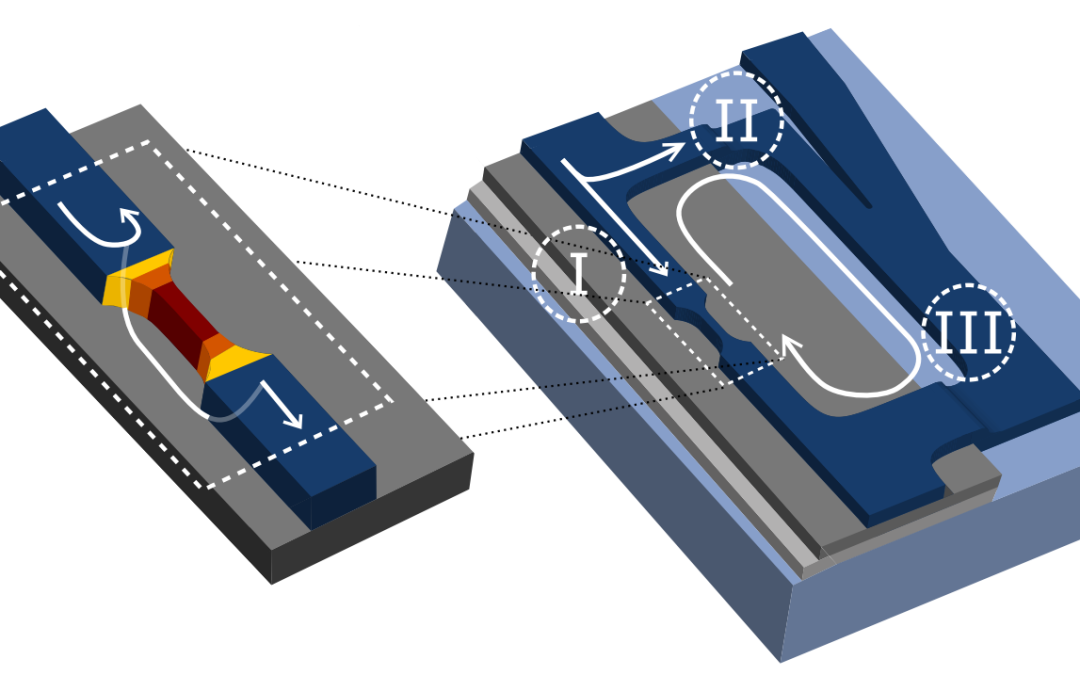

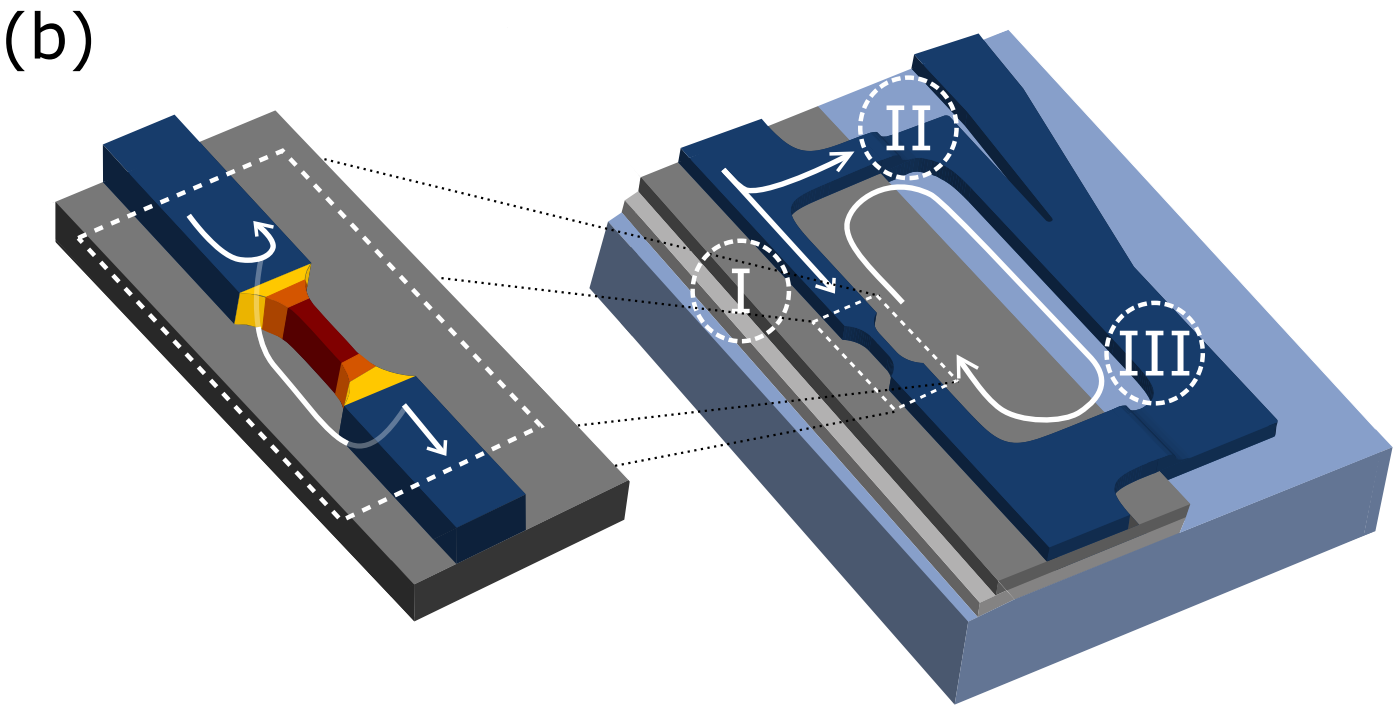

Training deep neural networks (DNNs) is computationally intensive and requires transferring massive volumes of data. Performing these operations on conventional von Neumann architectures incurs unmanageable time and power costs. Recent studies have shown that mixed-signal designs based on resistive crossbar architectures can achieve acceleration factors as high as 30 000× over state-of-the-art digital processors. These approaches use non-volatile memory elements as local processors. However, no technology developed to date satisfies the strict device requirements for the unit cell. This paper presents a superconducting nanowire-based processing element as a crosspoint device. The unit cell has many programmable non-volatile states that can be used to perform analog multiplication. Importantly, these states are intrinsically discrete due to flux quantization, which provides symmetric switching characteristics. Operation of these devices in a crossbar is described and verified with electro-thermal circuit simulations. Finally, the concept is validated on an actual DNN training task using an emulator.
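To make the crossbar idea concrete, the following is a minimal sketch of an idealized crossbar whose cells hold a discrete, integer-valued state, with each up or down step changing the weight by the same amount (the symmetric switching described above). It is not the emulator used in this work. The class name `Crossbar`, the parameters `n_states`, `w_max`, and `lr`, and the rounded outer-product update rule are all assumptions made for illustration, and device physics, noise, and electro-thermal effects are ignored.

```python
class Crossbar:
    """Idealized crossbar of cells with discrete, symmetric states.

    Hypothetical model for illustration only: each cell stores an
    integer n in [-n_max, n_max] and represents the weight n * dw.
    """

    def __init__(self, rows, cols, n_states=64, w_max=1.0):
        self.rows, self.cols = rows, cols
        self.n_max = n_states // 2          # assumed number of states per polarity
        self.dw = w_max / self.n_max        # weight change per discrete step
        self.state = [[0] * cols for _ in range(rows)]

    def forward(self, x):
        """Analog multiply-accumulate: each row sums weight * input."""
        return [sum(n * self.dw * xj for n, xj in zip(row, x))
                for row in self.state]

    def update(self, x, delta, lr):
        """Rank-one update: cell (i, j) moves by a whole number of steps
        approximating lr * delta[i] * x[j], clipped at the state limits."""
        for i in range(self.rows):
            for j in range(self.cols):
                steps = round(lr * delta[i] * x[j] / self.dw)
                n = self.state[i][j] + steps
                self.state[i][j] = max(-self.n_max, min(self.n_max, n))
```

Because the step size is the same in both directions, applying an update and then the same update with the opposite sign returns every cell to its starting state, provided no cell saturates in between. That reversibility is the property that asymmetric resistive devices typically lack.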

A complete description of the work may be found here.