Yannan Nellie Wu, Vivienne Sze, Joel S. Emer

Abstract:

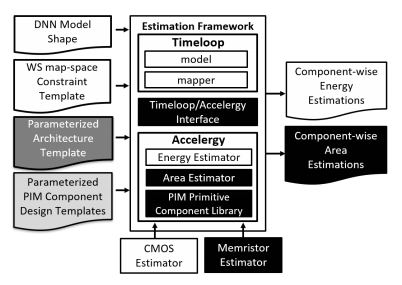

Processing-in-memory (PIM) deep neural network (DNN) accelerators, which aim to improve the energy and area efficiency of DNN processing by integrating computation into data storage, have gained popularity in recent years. A generally applicable framework that can quickly provide insight into the trade-offs involved in PIM accelerator design is therefore attractive. We present an architecture-level design estimation framework for PIM accelerators that allows designs to be represented easily with the provided architecture templates and component design templates, performs analytical runtime simulations, and produces technology-dependent area and energy estimations. We show that the framework can be easily used to evaluate state-of-the-art PIM accelerator designs; it achieves 95% accurate total energy estimations and reproduces exact area breakdowns of the components in the design. Related open-source code is available at http://accelergy.mit.edu/.

Index Terms: Architecture-Level Design Evaluations, Deep Neural Network Accelerators, Processing-in-Memory