J. Noraky, C. Mathy, A. Cheng, V. Sze


Abstract:

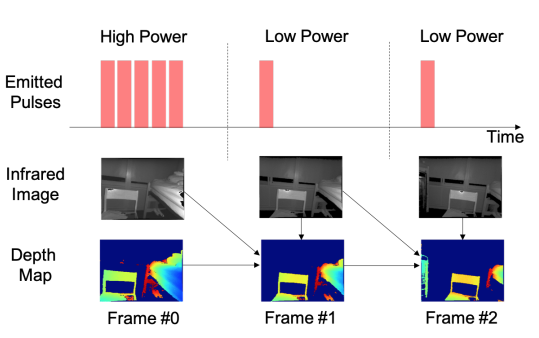

Time-of-flight (TOF) cameras are becoming increasingly popular for many mobile applications. To obtain accurate depth maps, TOF cameras must emit many pulses of light, which consumes significant power and shortens the battery life of mobile devices. However, emitting fewer pulses results in noisy depth maps. To obtain accurate depth maps while reducing the overall number of emitted pulses, we propose an algorithm that adaptively varies the number of pulses, infrequently capturing high-power depth maps and using them to help estimate the subsequent low-power ones. To estimate these low-power depth maps, our technique uses the previous frame and accounts for the 3D motion in the scene. We assume that the scene contains independently moving rigid objects and show that their motions can be estimated efficiently using only data from the TOF camera. The resulting algorithm estimates 640 × 480 depth maps at 30 frames per second on an embedded processor. We evaluate our approach on data collected with a pulsed TOF camera and show that it reduces the mean relative error of the low-power depth maps by up to 64% and the number of emitted pulses by up to 81%.
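The abstract describes predicting each low-power frame from the previous depth map by accounting for rigid 3D motion. Below is a minimal sketch of that prediction step. It is not the authors' implementation and assumes several things the abstract does not specify: a pinhole camera with hypothetical intrinsics `(fx, fy, cx, cy)`, a single rigid motion `(R, t)` that is already known, nearest-pixel forward warping with a z-buffer, and `0.0` marking invalid pixels. The paper handles several independently moving objects and estimates their motions from the TOF data, and this sketch does neither. The sketch also includes a mean relative error helper, assumed here to be the average of |estimate − reference| / reference over pixels that are valid in both maps.

```python
"""Sketch only: warp a previous depth map through one known rigid motion.

Assumptions (not from the paper): pinhole intrinsics (fx, fy, cx, cy),
a single rigid motion X' = R X + t, nearest-pixel forward warping,
and 0.0 marking pixels with no valid depth.
"""

from typing import List, Sequence, Tuple

DepthMap = List[List[float]]  # depth in meters, 0.0 = invalid pixel


def warp_depth(
    prev: DepthMap,
    R: Sequence[Sequence[float]],
    t: Sequence[float],
    intrinsics: Tuple[float, float, float, float],
) -> DepthMap:
    """Predict the current depth map by moving every valid 3D point of `prev`."""
    fx, fy, cx, cy = intrinsics
    h, w = len(prev), len(prev[0])
    out = [[0.0] * w for _ in range(h)]
    for v in range(h):
        for u in range(w):
            z = prev[v][u]
            if z <= 0.0:
                continue  # no measurement at this pixel
            # Back-project pixel (u, v) with depth z into a 3D point.
            p = ((u - cx) * z / fx, (v - cy) * z / fy, z)
            # Apply the rigid motion X' = R X + t.
            q = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
            if q[2] <= 0.0:
                continue  # point ended up behind the camera
            # Project onto the image plane and round to the nearest pixel.
            u2 = round(fx * q[0] / q[2] + cx)
            v2 = round(fy * q[1] / q[2] + cy)
            if 0 <= u2 < w and 0 <= v2 < h:
                # Z-buffer: when two points land on one pixel, keep the closer one.
                if out[v2][u2] == 0.0 or q[2] < out[v2][u2]:
                    out[v2][u2] = q[2]
    return out


def mean_relative_error(est: DepthMap, ref: DepthMap) -> float:
    """Average of |est - ref| / ref over pixels valid in both maps."""
    errs = [
        abs(e - r) / r
        for row_e, row_r in zip(est, ref)
        for e, r in zip(row_e, row_r)
        if e > 0.0 and r > 0.0
    ]
    return sum(errs) / len(errs) if errs else 0.0


if __name__ == "__main__":
    # A flat 4x4 scene at 2 m that moves 0.5 m away from the camera.
    prev = [[2.0] * 4 for _ in range(4)]
    identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    pred = warp_depth(prev, identity, (0.0, 0.0, 0.5), (2.0, 2.0, 1.5, 1.5))
    ref = [[2.5] * 4 for _ in range(4)]
    print(mean_relative_error(pred, ref))  # → 0.0
    print(mean_relative_error(prev, ref))  # roughly 0.2: stale frame, no warp
```

Forward warping with a z-buffer is chosen here only because it is short. A real pipeline would also need to fill holes left by disocclusion and combine the prediction with the noisy low-power measurement, which this sketch omits.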