James Noraky, Vivienne Sze

Low Power Depth Estimation for Time-of-Flight Imaging

Abstract:

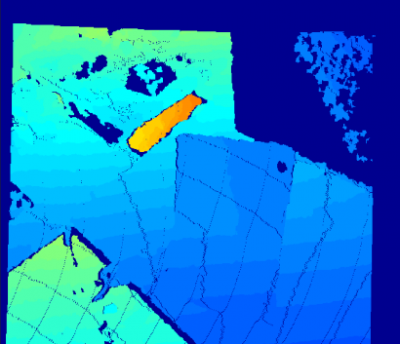

Depth sensing is used in a variety of applications, ranging from augmented reality to robotics. One way to measure depth is with a time-of-flight (TOF) camera, which obtains depth by emitting light and measuring its round-trip time. However, in many battery-powered devices, the TOF camera's illumination source consumes a significant amount of power, which limits battery life. To minimize the power required for depth sensing, we present an algorithm that exploits the apparent motion across images collected alongside the TOF camera to obtain a new depth map without illuminating the scene. Our technique is best suited for estimating the depth of rigid objects. Using block matching at a sparse set of points and least squares minimization, it produces low-latency 640×480 depth maps at 30 frames per second on a low-power embedded platform. We evaluated our technique on an RGB-D dataset, where it produced depth maps with a mean relative error of 0.85% while reducing the total power required for depth sensing by 3×.
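The abstract names two generic building blocks: block matching at a sparse set of points and least squares minimization. The sketch below illustrates both in Python with NumPy under stated assumptions. It uses a sum-of-absolute-differences (SAD) cost with an exhaustive search window, and fits a 2D affine motion model to the sparse displacements by linear least squares. The helper names `block_match` and `fit_affine`, the block size, and the search radius are hypothetical. The abstract does not specify the authors' least squares formulation (which targets depth of rigid objects), so this is not their algorithm, only an illustration of the two techniques it mentions.

```python
import numpy as np


def block_match(prev, curr, points, block=7, search=8):
    """Estimate a (dy, dx) displacement per point via exhaustive SAD block matching.

    Assumption: prev and curr are equal-shape 2-D grayscale arrays, and each
    (row, col) point is far enough from the border for its block to fit in prev.
    """
    r = block // 2
    h, w = prev.shape
    flows = []
    for y, x in points:
        ref = prev[y - r:y + r + 1, x - r:x + r + 1].astype(np.float64)
        best, best_d = np.inf, (0, 0)
        for dy in range(-search, search + 1):
            for dx in range(-search, search + 1):
                yy, xx = y + dy, x + dx
                # Skip candidate blocks that would extend past the image edge.
                if yy - r < 0 or xx - r < 0 or yy + r >= h or xx + r >= w:
                    continue
                cand = curr[yy - r:yy + r + 1, xx - r:xx + r + 1]
                sad = np.abs(ref - cand).sum()  # sum of absolute differences
                if sad < best:
                    best, best_d = sad, (dy, dx)
        flows.append(best_d)
    return np.array(flows, dtype=np.float64)


def fit_affine(points, flows):
    """Least squares fit of a hypothetical affine flow model to sparse displacements.

    Model: d = p0 + p1 * row + p2 * col, fitted separately for dy and dx.
    Returns a (3, 2) array: column 0 models dy, column 1 models dx.
    """
    pts = np.asarray(points, dtype=np.float64)
    A = np.column_stack([np.ones(len(pts)), pts[:, 0], pts[:, 1]])
    params, *_ = np.linalg.lstsq(A, flows, rcond=None)
    return params


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    prev = rng.integers(0, 256, size=(64, 64)).astype(np.uint8)
    # Simulate apparent motion: shift the whole frame 2 rows down, 3 columns right.
    curr = np.roll(prev, shift=(2, 3), axis=(0, 1))
    pts = [(20, 20), (20, 40), (40, 20), (40, 40), (30, 30)]
    flows = block_match(prev, curr, pts)
    print(flows)
    print(fit_affine(pts, flows))
```

Matching only a handful of points keeps the per-frame cost low, which is the motivation the abstract gives for working at a sparse set of points. The least squares step then turns those noisy local estimates into a single compact motion model.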